Keep learning forward

To be updated ...

#TIL : Running old Java applets in the browser

Most modern browsers have stopped supporting Java plugins, so you can't run Java applets in them.

Temporary workarounds:

- Run it in IE or Safari

- Run it in an old Firefox (version 23)

If an old Java applet can't run on Java 8 because it was signed with a weak signature algorithm, try this:

- Open the `java.security` file:

  - On macOS, it is located in `/Library/Java/JavaVirtualMachines/jdk[jdk-version].jdk/Contents/Home/jre/lib/security`

  - On Windows, it is located in `C:\Program Files (x86)\Java\jre\lib\security`

- Comment out this line by prefixing it with `#`:

      jdk.certpath.disabledAlgorithms=MD2, MD5, RSA keySize < 1024

- Rerun the applet

#TIL : realpath function

If you pass a non-existent path to PHP's `realpath` function, it returns `false`, which turns into an empty string when it is concatenated or echoed. So please don't do something like this:

    function storage_path($folder) {
        return realpath(__DIR__ . '/storage/' . $folder);
    }

if you expect it to return the full path of a new folder!
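For comparison, here is a minimal Python sketch of a helper that does work for folders that don't exist yet. It is not from the original post. `base_dir` is a hypothetical parameter standing in for PHP's `__DIR__`. The sketch also swaps `realpath` for `os.path.abspath`, which only normalizes the path string and never touches the filesystem.

```python
import os


def storage_path(folder: str, base_dir: str) -> str:
    """Build the absolute path base_dir/storage/folder.

    os.path.abspath only normalizes the path string, so this works even when
    the folder does not exist yet (PHP's realpath() would return false).
    """
    return os.path.abspath(os.path.join(base_dir, "storage", folder))


if __name__ == "__main__":
    here = os.getcwd()  # stand-in for PHP's __DIR__ in this sketch
    path = storage_path("new_folder", here)
    print(path, os.path.exists(path))
```

You can then create the folder yourself (for example with `os.makedirs(path, exist_ok=True)`) once you have the path.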

#TIL : Cleaning up old linux kernels

The other day, I tried to reboot a production server whose /boot partition was out of space (I had upgraded many kernels without rebooting, so the system never cleaned up the old ones). In the end, doomsday came! The new kernel failed to install, the server booted into it anyway, and my system crashed!

So, here is what I learned:

- Never upgrade the kernel without cleaning up the old ones (just reboot after upgrading)

- Never reboot a production server without a backup

- MOST IMPORTANT: NEVER do both of the above at the same time on a weekend!!!

Solution:

Check the current kernel:

    uname -r

List all kernels:

    dpkg --list | grep linux-image

Remove a kernel:

    sudo apt-get purge linux-image-x.x.x-x-generic

Finally, update GRUB after removing all old kernels:

    sudo update-grub2

YOLO command for Debian-based distros (removes all old kernels in one line), from AskUbuntu:

    dpkg --list | grep linux-image | awk '{ print $2 }' | sort -V | sed -n '/'`uname -r`'/q;p' | xargs sudo apt-get -y purge

THEN,

    sudo reboot

#TIL : HTTP/2 support for the Python requests library

A sophisticated HTTP client for Python is `requests`. It has a simple API but powerful features, and you can use it for crawling, sending requests to third-party APIs or writing tests. However, at the moment it doesn't support the HTTP/2 protocol. To be fair, we rarely need HTTP/2 features like Server Push or multiplexed streams. But sometimes an API endpoint only supports HTTP/2, like Akamai's load-balancing service.

The hero is a new library named `hyper`, which is being developed to support the full HTTP/2 spec. If all we need is to send single requests to an HTTP/2 server, it works like a charm.

Installation

    $ pip install requests
    $ pip install hyper

Usage

    import requests
    from hyper.contrib import HTTP20Adapter

    s = requests.Session()
    # Route every https:// request through hyper's HTTP/2 adapter
    s.mount('https://', HTTP20Adapter())
    r = s.get('https://cloudflare.com/')
    print(r.status_code)
    print(r.url)

This means any URL with the prefix `https://` will be handled by the `HTTP20Adapter` of the hyper library.

Notice

If you run the example above, you will see this result:

    200
    https://cloudflare.com/

You might have expected it to follow the redirect to `https://www.cloudflare.com/` automatically. We can fix this by using a version newer than `0.7.0`, which fixes the header-key bytestring issue:

    $ pip uninstall hyper
    $ pip install https://github.com/Lukasa/hyper/archive/development.zip

Then try it out!!!

#TIL : Free sandbox server for development

We can use Heroku as a forever-free sandbox for testing or hosting a microservice. Adding a credit card gives you 1000 free compute hours per month.

Heroku puts a service to sleep when it receives no requests for a while. We can use a cronjob-like service that checks the service's health to keep it alive!!! ;)

Health-check SaaS with cron-like pings: Pingdom, StatusCake, port-monitor, UptimeRobot

Btw, I don't recommend keeping a service alive when nobody uses it. It puts extra load on Heroku's infrastructure, and THAT'S NOT FAIR to them!

#TIL : Gearman bash worker and client

Gearman is an awesome job queue service that helps you scale your system. In a smaller context, it can help us run a background worker for minor tasks like backing up data or cleaning up the system.

Install:

    $ sudo apt install gearman-job-server gearman-tools

Create a worker bash script (`worker.sh`):

    #!/bin/bash
    echo "$1"
    echo "$2"

Run the worker (`-w` means worker mode, `-f test` means the function name will be `test`):

    $ chmod +x worker.sh
    $ gearman -w -f test xargs ./worker.sh

Sending a job:

    $ gearman -f test "hello" "hogehoge"

Sending a background job:

    $ gearman -b -f test "hello" "hogehoge"

#TIL : Resolving conflicts like a boss

When you `git merge` a new branch into an old branch, you may want to take the whole `ours` or `theirs` version without updating every conflicted file by hand:

    grep -lr '<<<<<<<' . | xargs git checkout --ours

Or

    grep -lr '<<<<<<<' . | xargs git checkout --theirs

Explanation: these commands find every file containing the `<<<<<<<` string (i.e. conflicted files) and run `git checkout --[side]` on them. Remember to `git add` those files afterwards to mark them as resolved.

#TIL : Reducing Docker image size the right way

When building an image, the Docker engine commits a filesystem layer for every instruction (RUN, ADD, COPY). Files deleted in a later layer still take up space in the earlier one. So when you install packages with a package manager like apt, yum, pacman, ..., remember to clean its cache in the same RUN line.
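To see why cleaning up in a separate RUN line doesn't help, here is a toy Python model of the rule described above. It is only an illustration under simplifying assumptions. A layer is reduced to the files it adds (with made-up sizes) and the paths it deletes. Real layers are tar archives, and deletions are recorded as whiteout files. The function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Toy model of an image layer: (files added -> size in bytes, paths deleted)
Layer = Tuple[Dict[str, int], Set[str]]


def visible_files(layers: List[Layer]) -> Dict[str, int]:
    """Files a container sees after stacking the layers in order."""
    fs: Dict[str, int] = {}
    for added, deleted in layers:
        fs.update(added)
        for path in deleted:
            fs.pop(path, None)  # a later delete only hides the file
    return fs


def image_size(layers: List[Layer]) -> int:
    """Bytes stored in the image: every layer keeps everything it added."""
    return sum(sum(added.values()) for added, _deleted in layers)


def single_run(steps: List[Layer]) -> Layer:
    """One RUN line commits only the net result of all its steps."""
    return visible_files(steps), set()


if __name__ == "__main__":
    # Made-up sizes, for illustration only
    update = ({"var/lib/apt/lists/index": 40}, set())
    install = ({"usr/bin/git": 30, "var/cache/apt/git.deb": 20}, set())
    clean = ({}, {"var/lib/apt/lists/index", "var/cache/apt/git.deb"})

    bad = [update, install, clean]                  # three RUN lines, three layers
    right = [single_run([update, install, clean])]  # one RUN line, one layer
    print(image_size(bad), image_size(right))       # 90 30
```

Both images expose the same files to the container. Only the single-RUN version avoids shipping the deleted apt data.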

BAD WAY

    RUN apt-get update
    RUN apt-get install -y git
    # Something here
    # End of file
    # This cleanup can't shrink the layers already committed above
    RUN apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

RIGHT WAY

    RUN apt-get update && apt-get install -y git zip unzip && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

#TIL : Changing channel from alpha to stable will remove ALL DATA

On macOS, changing the Docker channel will remove all data (including volumes, images, networks and ... everything).

That's because Docker for Mac uses a minimal Linux VM to host the Docker engine, so changing the VM means discarding all the old data. So BE CAREFUL!

#TIL : zcat : decompressing pipe tool

`zcat` is a tool that writes the decompressed content of a gz file to stdout, so you can pipe it into the next tool. It makes commands cleaner and (maybe) faster, because you don't have to decompress the gz file first.

Examples:

Finding a string in a gzipped text file

    $ zcat secret.gz | grep '42'

Importing a SQL backup file

    $ mysqldump -u root -p db_name1 | gzip > db_name.sql.gz
    $ zcat db_name.sql.gz | mysql -u root -p db_name_2

#TIL : Using the BSD find utility to find files and folders and exec commands on them

Simple syntax of find:

    $ find [find_path] -type [file_type] -exec [command] {} \;

Add a filename pattern to filter the result:

    $ find [find_path] -name "*.php" -type [file_type] -exec [command] {} \;

Where `file_type` is:

- b : block special

- c : character special

- d : directory

- f : regular file

- l : symbolic link

- p : FIFO

- s : socket
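If you need the same walk-and-filter loop from a script, here is a rough Python equivalent of `find [find_path] -type f|d -name PATTERN`. It is only a sketch, and the `find` function name is hypothetical. It supports just the `f` and `d` types and doesn't treat symlinks specially. Unlike find, it never reports the starting directory itself for `-type d`.

```python
import fnmatch
import os
from typing import Iterator, Optional


def find(root: str, file_type: str = "f", name: Optional[str] = None) -> Iterator[str]:
    """Yield paths under root, roughly like `find root -type f|d [-name PATTERN]`."""
    if file_type not in ("f", "d"):
        raise ValueError("only 'f' and 'd' are supported in this sketch")
    for dirpath, dirnames, filenames in os.walk(root):
        entries = filenames if file_type == "f" else dirnames
        for entry in entries:
            # Like -name, match only the basename against a shell-style glob
            if name is None or fnmatch.fnmatch(entry, name):
                yield os.path.join(dirpath, entry)
```

To mimic `-exec`, call a function on each path. For example, `for path in find('.', 'f', '*.log'): os.remove(path)` does the same job as the "Remove all log files" example.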

Examples:

Fix common file and directory permissions

    $ find . -type f -exec chmod 644 {} \;
    $ find . -type d -exec chmod 755 {} \;

Check the syntax of all PHP files

    $ find . -type f -name "*.php" -exec php -l {} \; | grep -v 'No syntax errors detected'

Remove all log files

    $ find . -type f -name "*.log" -exec rm -f {} \;

WANT MORE???

    $ man find

#TIL : wget Output flag

`-O` means output:

    $ # without -O: index.html, or (with --content-disposition) the filename from the response header
    $ wget www.abc.xyz
    $ # output file will be filename.html
    $ wget -O filename.html www.abc.xyz
    $ # output to stdout
    $ wget -O- www.abc.xyz
    $ wget -O- https://gist.githubusercontent.com/khanhicetea/4fa9f5103cd7fbc2d2270abce05c9c2b/raw/helloworld.sh | bash

#TIL : Checking force-pushed conflicts in source code during automated testing

Using an automated CI solution like Travis, Jenkins, DroneCI, ... is a good way to ensure software quality and avoid breaking deployments.

Sometimes developers force-push conflicted code to the production branch. If the CI only tests the backend (Python, Ruby, PHP, Go, ...) and forgets about the frontend code, your application will blow up!

So checking for conflict markers is a required step before testing the backend and deploying.

I used the `grep` tool to check for conflict markers in the current dir. Create a file named `conflict_detector.sh` in the root dir of the source code:

    #!/bin/bash
    # grep exits 0 when it finds a match, so invert it: fail the build if any marker exists
    if grep -rli --exclude=conflict_detector.sh --exclude-dir={.git,vendor,venv,node_modules} "<<<<<<< HEAD" .; then
        exit 1
    fi
    exit 0

This mini tool prints the list of conflicted files. If its exit code is not 0, the test run will fail!
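The same detector can also be sketched with Python's standard library, for example for a CI image without GNU grep. It mirrors the flags used above: `-r` (recursive), `-i` (case-insensitive), `-l` (list files) and the same excluded dirs. The names `conflicted_files` and `exit_code` are hypothetical. Files are read loosely as UTF-8 with decoding errors ignored.

```python
import os
from typing import Iterable, List

# Built from parts so this script never matches its own search
MARKER = "<" * 7 + " HEAD"
EXCLUDED_DIRS = (".git", "vendor", "venv", "node_modules")


def conflicted_files(root: str = ".", excluded_dirs: Iterable[str] = EXCLUDED_DIRS) -> List[str]:
    """Return files under root containing the conflict marker (case-insensitive)."""
    excluded = set(excluded_dirs)
    needle = MARKER.lower()
    hits: List[str] = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune excluded dirs in place so os.walk never descends into them
        dirnames[:] = [d for d in dirnames if d not in excluded]
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                with open(path, encoding="utf-8", errors="ignore") as f:
                    if any(needle in line.lower() for line in f):
                        hits.append(path)
            except OSError:
                continue  # unreadable file: skip it
    return hits


def exit_code(root: str = ".") -> int:
    """Print conflicted files and return the CI exit code: 1 if any found, else 0."""
    hits = conflicted_files(root)
    for path in hits:
        print(path)
    return 1 if hits else 0
```

Suppose the sketch is saved as `conflict_detector.py` (a hypothetical name). A CI step could then run `python -c "import sys, conflict_detector; sys.exit(conflict_detector.exit_code())"`, so that any conflict marker fails the build.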

#TIL : Granting a user sudo without a password

This is a bad practice, but it's a handy hack if you YOLO.

    # Create a user with a home dir and bash shell (if you don't have one yet)
    $ sudo useradd -m -s /bin/bash YOUR_USERNAME
    $ sudo vi /etc/sudoers

Add this line below `root ALL=(ALL:ALL) ALL` (in the "User privilege specification" section):

    YOUR_USERNAME ALL=(ALL:ALL) NOPASSWD:ALL

Then type `:wq!` to force-save the file, since it is read-only. Running `sudo visudo` instead of `vi` is safer, because it checks the syntax before saving.

Enjoy sudo!

#TIL : Cloudflare Error 522 Connection Timed Out

If you use Cloudflare as a proxy in front of your web server, you get many performance benefits (asset caching, DDoS protection and a cheap CDN). But sometimes you will face the error "522 Connection Timed Out".

The problem can be caused by:

- Networking (Cloudflare can't reach the origin server: firewall blocking, network issues at layers 1, 2 or 3)

- Timeout (the origin server takes longer than 90 seconds to respond)

- An empty or invalid response from the origin server

- Missing or oversized HTTP headers (> 8 KB)

- A failed TCP handshake
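To rule out the networking and TCP-handshake causes, you can check whether the origin accepts TCP connections at all. Here is a minimal Python sketch. The function name and the 5-second default timeout are assumptions, not values used by Cloudflare. Keep in mind that Cloudflare connects from its own IP ranges, so a firewall that lets your test machine in may still block Cloudflare.

```python
import socket


def origin_accepts_tcp(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP handshake with host:port completes within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out (socket.timeout is an OSError)
        return False
```

For example, call `origin_accepts_tcp("origin.example.com", 443)`, replacing the placeholder host with your origin's real address.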


#TIL : MySQL: dumping only the table structure

Add `-d` (long form `--no-data`) to dump only the table structure, without any rows.

Example:

    $ mysqldump -h 127.0.0.1 -u root -p"something" -d database1 > db.sql

#TIL : Compressing and Extracting files with rar in Linux

zip and tar disadvantages

Unicode filenames can get transformed into weird characters, which leads to broken paths and broken links.

Notice

`rar` and `unrar` on Linux aren't the same version, so don't use `unrar` to extract files archived by `rar` (it causes invalid full paths).

Installation

Ubuntu:

    $ sudo apt install rar

Red Hat (using RPMForge):

    $ sudo yum install rar

Compressing files and folders

Compressing files

    $ rar a result.rar file1 file2 file3 fileN

Compressing a dir and its subdirs (remember the trailing slash at the end)

    $ rar a -r result.rar folder1/

Locking a RAR file with a password (add `-p"THE_PASSWORD_YOU_WANT"`)

    $ rar a -p"0cOP@55w0rD" result.rar file1 file2 file3 fileN
    $ rar a -p"0cOP@55w0rD" -r result.rar folder1/

Extracting files

Listing the content of a RAR file

    $ rar l result.rar

Extracting a RAR file to the current dir (without the archived paths)

    $ rar e result.rar

Extracting a RAR file to the current dir with full paths

    $ rar x result.rar

WANT MORE?

Ask it!

    $ rar -?

BONUS

WHAT IF I TOLD YOU THAT A RAR FILE CAN BE ALMOST 40 TIMES BIGGER THAN ITS ORIGINAL FILE?

    $ echo 'a' > a.txt
    $ rar a a.rar a.txt
    RAR 3.80   Copyright (c) 1993-2008 Alexander Roshal   16 Sep 2008
    Shareware version         Type RAR -? for help
    Evaluation copy. Please register.
    Creating archive a.rar
    Adding    a.txt    OK
    Done
    $ ls -al
    total 72
    -rw-r--r-- 1 root root 77 May 17 14:18 a.rar
    -rw-r--r-- 1 root root  2 May 17 14:17 a.txt
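The extra bytes are fixed archive overhead (headers, the stored file name, metadata), which dwarfs a 2-byte file. Python's standard library can't write rar, so this illustration uses the `zipfile` module instead, and the same effect shows up with zip. `zip_size` is a hypothetical helper, and the exact byte count depends on the archive format.

```python
import io
import zipfile


def zip_size(name: str, data: bytes) -> int:
    """Size in bytes of an in-memory zip archive holding one uncompressed file."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_STORED) as zf:
        zf.writestr(name, data)
    return len(buf.getvalue())


if __name__ == "__main__":
    data = b"a\n"  # the same 2 bytes that `echo 'a' > a.txt` writes
    size = zip_size("a.txt", data)
    print(f"{size} bytes of archive for {len(data)} bytes of data")
```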

Lightning thought #1 : MAGIC !

Random quote

“Insanity: doing the same thing over and over again and expecting different results.” - Albert Einstein

It's true in LOGIC ! But sometimes, it goes wrong in computer science and ... life.

What does a computer program do?

We learned this philosophy in Computer Science courses:

PROGRAM takes INPUT and produces OUTPUT

So, same PROGRAM + same INPUT = same OUTPUT

And that's the basis of every testing technique. We expect a specified OUTPUT for a specified INPUT. If not, it fails!

What happens in reality ?

IT'S MAGIC!

Sometimes it works, sometimes it doesn't! This is a common situation in a developer's life and in human life.

But have you ever thought about the root of it? Why? How did it happen?

I was drunk when writing this, but here are my random thoughts:

- Time: of course, time affects everything it touches, but I split it into 2 reasons:

  - Randomization: anything random depends on timing. At A, it was X. But at B, it will be Y. So a program (or a life) that depends on a random thing is unstable, unpredictable and magic!

  - Limitation: everything has its limits. Once you go over them, you will be blocked or have to wait.

- Dependencies: everything has dependencies; even NOTHING depends on EVERY dependency.

  - Unavailable: dead, downtime, overloaded

  - Breaking changes: you need X but the dependency has Y

How about human life?

If you keep doing the same thing but with a different attitude, magic can happen!

That's why machines can't beat humans!

Because humans are unpredictable!

Ref:

- Images from Google Search Photos


#TIL : Basics of the sqlite command line tool

We can use the `sqlite3` command line tool to run SQL statements against an SQLite database file.

View all tables:

    .tables

Truncate a table:

    delete from [table_name];

then run `vacuum;` to reclaim the space.

Close: press Ctrl + D to exit.

    $ sqlite3 database.sqlite
    SQLite version 3.8.10.2 2015-05-20 18:17:19
    Enter ".help" for usage hints.
    sqlite> .tables
    auth_group                  backend_church
    auth_group_permissions      backend_masstime
    auth_permission             django_admin_log
    auth_user                   django_content_type
    auth_user_groups            django_migrations
    auth_user_user_permissions  django_session
    backend_area
    sqlite> select * from auth_user;
    1|pbkdf2_sha256$30000$QQSOJMiXmNly$mWUlYwZnaQGsv9UVZcdTb29P7IHrgnd7ja3T/uwFqvw=|2017-03-25 15:06:40.528549|1|||hi@khanhicetea.com|1|1|2017-03-25 15:06:23.822489|admin
    sqlite> describe auth_user;
    Error: near "describe": syntax error
    sqlite> select * from django_session;
    4nmyjqpw292bmdnb5oxasi74v9rdhzoc|MzcwZDMxMzk5MGZkZTg2MjY4YWYyNmZiMzRkNWQwOTVjYzczODk5OTp7Il9hdXRoX3VzZXJfaGFzaCI6IjhlZTZjM2NhOGJjNWU4ODU0ZGE3NTYzYmQ4M2FkYzA0MGI4NTI4NzgiLCJfYXV0aF91c2VyX2JhY2tlbmQiOiJkamFuZ28uY29udHJpYi5hdXRoLmJhY2tlbmRzLk1vZGVsQmFja2VuZCIsIl9hdXRoX3VzZXJfaWQiOiIxIn0=|2017-04-08 15:06:40.530786
    sqlite> delete from django_session;
    sqlite> vacuum;
    sqlite> ^D

SQLite has no `describe` statement. Use `.schema auth_user` (or `PRAGMA table_info(auth_user);`) instead.

#TIL : Base64 encode and decode built-in tools

Browsers have built-in helper functions to encode and decode base64:

- `btoa`: base64 encode

- `atob`: base64 decode
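Outside the browser, Python's standard `base64` module does the same job. Here is a minimal sketch with hypothetical helper names. Note that `btoa` only accepts characters up to U+00FF, while these helpers encode text as UTF-8, so the two agree only for plain ASCII text.

```python
import base64


def b64encode_text(text: str) -> str:
    """Like btoa() for ASCII text: str -> base64 str (UTF-8 under the hood)."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def b64decode_text(data: str) -> str:
    """Like atob() for ASCII text: base64 str -> str (UTF-8 under the hood)."""
    return base64.b64decode(data).decode("utf-8")


if __name__ == "__main__":
    print(b64encode_text("Hello World !"))             # SGVsbG8gV29ybGQgIQ==
    print(b64decode_text("SW4gR29kIFdlIFRydXN0ICE="))  # In God We Trust !
```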

In the browser console:

    > btoa('Hello World !')
    "SGVsbG8gV29ybGQgIQ=="
    > atob('SW4gR29kIFdlIFRydXN0ICE=')
    "In God We Trust !"

#TIL : ab failed responses

When benchmarking an HTTP application server with the `ab` tool, you shouldn't only care about how many requests per second it serves. You should also check the percentage of successful responses.

Note that all responses must have the same content length. `ab` counts any response whose content length differs from the `Document Length` (in the ab result) as a failed response.

Example

A web server using Flask:

    from flask import Flask
    from random import randint

    app = Flask(__name__)

    @app.route("/")
    def hello():
        # The body is 5, 10 or 15 bytes long, at random
        return "Hello" * randint(1, 3)

    if __name__ == "__main__":
        app.run()

Benchmark using ab:

    $ ab -n 1000 -c 5 http://127.0.0.1:5000/
    This is ApacheBench, Version 2.3 <$Revision: 1706008 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Benchmarking 127.0.0.1 (be patient)
    Completed 100 requests
    Completed 200 requests
    Completed 300 requests
    Completed 400 requests
    Completed 500 requests
    Completed 600 requests
    Completed 700 requests
    Completed 800 requests
    Completed 900 requests
    Completed 1000 requests
    Finished 1000 requests

    Server Software:        Werkzeug/0.12.1
    Server Hostname:        127.0.0.1
    Server Port:            5000

    Document Path:          /
    Document Length:        10 bytes

    Concurrency Level:      5
    Time taken for tests:   0.537 seconds
    Complete requests:      1000
    Failed requests:        683
       (Connect: 0, Receive: 0, Length: 683, Exceptions: 0)
    Total transferred:      164620 bytes
    HTML transferred:       9965 bytes
    Requests per second:    1862.55 [#/sec] (mean)
    Time per request:       2.684 [ms] (mean)
    Time per request:       0.537 [ms] (mean, across all concurrent requests)
    Transfer rate:          299.43 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0    0   0.0      0       0
    Processing:     1    3   0.7      2      11
    Waiting:        1    2   0.6      2      11
    Total:          1    3   0.7      3      11
    WARNING: The median and mean for the processing time are not within a normal deviation
            These results are probably not that reliable.

    Percentage of the requests served within a certain time (ms)
      50%      3
      66%      3
      75%      3
      80%      3
      90%      3
      95%      3
      98%      5
      99%      6
     100%     11 (longest request)

In this example, the first response's content length is 10 ("Hello" x 2). So every response with a content length of 5 or 15 is counted as a failed response.
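Here is a simplified Python model of that bookkeeping. It assumes, as described above, that the first response's size becomes the Document Length. It ignores ab's concurrency and the other failure kinds (connect, receive, exceptions). `count_length_failures` is a hypothetical name.

```python
import random
from typing import List, Tuple


def count_length_failures(lengths: List[int]) -> Tuple[int, int]:
    """Simplified model of ab's length check.

    The first response's size becomes the Document Length, and every response
    whose size differs from it counts as failed.
    Returns (document_length, failed_count).
    """
    if not lengths:
        return 0, 0
    document_length = lengths[0]
    failed = sum(1 for n in lengths if n != document_length)
    return document_length, failed


if __name__ == "__main__":
    # Bodies like the Flask handler above: "Hello" repeated 1 to 3 times
    lengths = [len("Hello" * random.randint(1, 3)) for _ in range(1000)]
    print(count_length_failures(lengths))
```

With three equally likely sizes, roughly two thirds of the requests end up "failed", which is in line with the 683 failures above.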

#TIL : Persistent connection to MySQL

When a PHP process connects to a MySQL server, the connection can be persistent if your PHP config allows it with `mysql.allow_persistent` or `mysqli.allow_persistent`. PDO has the attribute `ATTR_PERSISTENT`:

    $dbh = new PDO('DSN', 'KhanhDepZai', 'QuenMatKhauCMNR', [PDO::ATTR_PERSISTENT => TRUE]);

Object destruction

PHP destroys an object automatically when the object loses all its references.

Example code:

    <?php
    $x = null;

    function klog($x) { echo $x . ' => '; }

    class A {
        private $k;
        function __construct($k) { $this->k = $k; }
        function b() { klog('[b]'); }
        function __destruct() { klog("[{$this->k} has been killed]"); }
    }

    function c($k) { return new A($k); }

    // The temporary object loses its last reference right after ->b()
    function d() { c('d')->b(); }

    // The object is kept in the global $x, so it lives until the script ends
    function e() { global $x; $x = c('e'); $x->b(); klog('[e]'); }

    function f() { klog('[f]'); }

    d();
    e();
    f();

Result:

    [b] => [d has been killed] => [b] => [e] => [f] => [e has been killed] =>

Reducing PDO persistent connections in a long-running PHP process (connecting to multiple databases)

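Instead of using a shared service object, we should use a factory design pattern that creates a new PDO object for each job (each connection). When the job ends, PHP destroys the PDO object and closes its MySQL connection, so fewer connections to MySQL are open at the same time. This holds for non-persistent connections; a persistent connection is kept open for reuse even after its PDO object is destroyed.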
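Here is the same idea in Python, as a sketch rather than the setup described in this post. `sqlite3` stands in for MySQL so it runs anywhere, and `connection_factory` and `run_job` are hypothetical names. Instead of relying on object destruction as in PHP, the sketch closes the connection explicitly with `contextlib.closing`, which is more predictable in Python.

```python
import sqlite3
from contextlib import closing
from typing import Callable, List


def connection_factory(dsn: str) -> sqlite3.Connection:
    """Hypothetical factory: every job gets its own fresh connection."""
    return sqlite3.connect(dsn)


def run_job(
    dsn: str,
    statements: List[str],
    factory: Callable[[str], sqlite3.Connection] = connection_factory,
) -> None:
    """Run one job (e.g. a migration for one database) on its own connection."""
    # closing() calls conn.close() when the job ends, even if a statement fails,
    # so connections don't pile up in a long-running process.
    with closing(factory(dsn)) as conn:
        with conn:  # commits on success, rolls back on an exception
            for sql in statements:
                conn.execute(sql)
```

Calling `run_job(dsn, statements)` once per database keeps at most one connection open per running job.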

I learned this while implementing a web consumer (a long-running process) that runs database migrations for multiple databases.

Before this fix, our MySQL server crashed because of a huge number of open connections.

Now, everything works like a charm !

#TIL : Using VarDumper in PHPUnit

The trick is to write the output to the STDERR stream. I wrote this helper function:

    function phpunit_dump() {
        $cloner = new \Symfony\Component\VarDumper\Cloner\VarCloner();
        // Dump to STDERR instead of the buffered standard output
        $dumper = new \Symfony\Component\VarDumper\Dumper\CliDumper(STDERR);
        foreach (func_get_args() as $var) {
            $dumper->dump($cloner->cloneVar($var));
        }
    }

How to use it?

    // Something magic here :D
    phpunit_dump($magic_var1, $magic_var2, $magic_of_magic);
    // So much magic below, can't understand anymore
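In a Python test suite, the same trick could be a helper that pretty-prints to stderr with `pprint`. Here is a minimal sketch; `debug_dump` is a hypothetical name. Note that pytest captures stderr as well, so run it with `-s` to see the dump live.

```python
import pprint
import sys


def debug_dump(*values: object) -> None:
    """Pretty-print each value to stderr instead of stdout."""
    for value in values:
        # Look sys.stderr up at call time so any redirection is respected
        pprint.pprint(value, stream=sys.stderr)
```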

#TIL : UNION vs UNION ALL

The difference is that UNION removes duplicated rows (RETURNS ONLY DISTINCT ROWS), while UNION ALL keeps every row.

Examples:

    mysql> select '1', '2' union select '2', '1' union select '3', '4' union select '1', '2';
    +---+---+
    | 1 | 2 |
    +---+---+
    | 1 | 2 |
    | 2 | 1 |
    | 3 | 4 |
    +---+---+
    3 rows in set (0.00 sec)

    mysql> select '1', '2' union select '2', '1' union select '3', '4' union select '1', '3';
    +---+---+
    | 1 | 2 |
    +---+---+
    | 1 | 2 |
    | 2 | 1 |
    | 3 | 4 |
    | 1 | 3 |
    +---+---+
    4 rows in set (0.00 sec)

    mysql> select '1', '2' union all select '2', '1' union all select '3', '4' union all select '1', '2';
    +---+---+
    | 1 | 2 |
    +---+---+
    | 1 | 2 |
    | 2 | 1 |
    | 3 | 4 |
    | 1 | 2 |
    +---+---+
    4 rows in set (0.00 sec)

Tips

When you know there will be no duplicates, UNION ALL tells the server to skip that (useless, expensive) deduplication step.
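To try this without a MySQL server, you can run the same queries against SQLite from Python's standard library. This is SQLite rather than MySQL, so row order may differ, which is why the usage below only compares row counts. `compound_rows` is a hypothetical helper.

```python
import sqlite3
from contextlib import closing
from typing import List, Tuple

QUERY = "SELECT '1', '2' {op} SELECT '2', '1' {op} SELECT '3', '4' {op} SELECT '1', '2'"


def compound_rows(op: str) -> List[Tuple[str, ...]]:
    """Run the example query with UNION or UNION ALL on an in-memory SQLite DB."""
    if op not in ("UNION", "UNION ALL"):
        raise ValueError("op must be 'UNION' or 'UNION ALL'")
    with closing(sqlite3.connect(":memory:")) as conn:
        return conn.execute(QUERY.format(op=op)).fetchall()


if __name__ == "__main__":
    print(len(compound_rows("UNION")))      # 3: the duplicate ('1', '2') is removed
    print(len(compound_rows("UNION ALL")))  # 4: every row is kept
```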

#TIL : Random quote 23 Feb 2017

A computer lets you make more mistakes faster than any other invention in human history, with the possible exceptions of handguns and tequila. - Mitch Ratcliffe

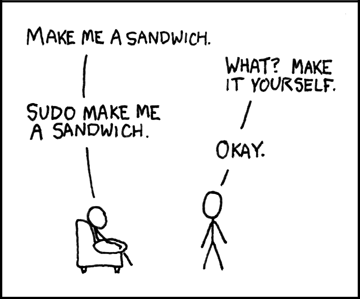

Haha, it's totally true! And it reminds me of this meme, which I saw when I was a little bit drunk!

#TIL : String problems can cause logical bugs in application

Example table

    mysql> describe `test`;
    +------------+-------------+------+-----+-------------------+----------------+
    | Field      | Type        | Null | Key | Default           | Extra          |
    +------------+-------------+------+-----+-------------------+----------------+
    | id         | smallint(6) | NO   | PRI | NULL              | auto_increment |
    | name       | varchar(50) | NO   |     | NULL              |                |
    | created_at | timestamp   | YES  | MUL | CURRENT_TIMESTAMP |                |
    +------------+-------------+------+-----+-------------------+----------------+
    3 rows in set (0.00 sec)

Here is the dump file of the table:

    DROP TABLE IF EXISTS `test`;
    CREATE TABLE `test` (
      `id` smallint(6) NOT NULL AUTO_INCREMENT,
      `name` varchar(50) COLLATE utf8_unicode_ci NOT NULL,
      `created_at` timestamp NULL DEFAULT CURRENT_TIMESTAMP,
      PRIMARY KEY (`id`),
      KEY `created_at` (`created_at`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci;

    LOCK TABLES `test` WRITE;
    /*!40000 ALTER TABLE `test` DISABLE KEYS */;
    INSERT INTO `test` (`id`, `name`, `created_at`)
    VALUES
      (1,'abc','2017-02-16 17:28:59'),
      (2,' ABC','2017-02-16 17:29:14'),
      (3,'ABC ','2017-02-16 17:29:21'),
      (4,'aBc','2017-02-16 17:29:31');
    /*!40000 ALTER TABLE `test` ENABLE KEYS */;
    UNLOCK TABLES;

Case Insensitive

When creating a database, table or column, we have to set its default string COLLATION. If the collation ends with `_ci`, comparisons are case insensitive.

Then `"abc" = "ABC"`, `"abc" = "aBc"`, `"abc" = "Abc"`, ... are all true (X = Y <=> UPPER(X) = UPPER(Y)):

    mysql> select ("abc" = "ABC") as `case1`, ("abc" = "aBc") as `case2`, ("abc" = "Abc") as `case3`, ("abc" = "abcd") as `wrong`;
    +-------+-------+-------+-------+
    | case1 | case2 | case3 | wrong |
    +-------+-------+-------+-------+
    |     1 |     1 |     1 |     0 |
    +-------+-------+-------+-------+
    1 row in set (0.00 sec)

String Trimming

Check this out !

    mysql> select `id`, CONCAT("'", `name`, "'") as `name_with_quote`, `created_at` from test;
    +----+-----------------+---------------------+
    | id | name_with_quote | created_at          |
    +----+-----------------+---------------------+
    |  1 | 'abc'           | 2017-02-16 17:28:59 |
    |  2 | ' ABC'          | 2017-02-16 17:29:14 |
    |  3 | 'ABC '          | 2017-02-16 17:29:21 |
    |  4 | 'aBc'           | 2017-02-16 17:29:31 |
    +----+-----------------+---------------------+
    4 rows in set (0.00 sec)

    mysql> select `id`, CONCAT("'", `name`, "'") as `name_with_quote`, `created_at` from test where `name` = 'abc';
    +----+-----------------+---------------------+
    | id | name_with_quote | created_at          |
    +----+-----------------+---------------------+
    |  1 | 'abc'           | 2017-02-16 17:28:59 |
    |  3 | 'ABC '          | 2017-02-16 17:29:21 |
    |  4 | 'aBc'           | 2017-02-16 17:29:31 |
    +----+-----------------+---------------------+
    3 rows in set (0.01 sec)

    mysql> select `id`, CONCAT("'", `name`, "'") as `name_with_quote`, `created_at` from test where `name` = ' abc';
    +----+-----------------+---------------------+
    | id | name_with_quote | created_at          |
    +----+-----------------+---------------------+
    |  2 | ' ABC'          | 2017-02-16 17:29:14 |
    +----+-----------------+---------------------+
    1 row in set (0.00 sec)

BAAMMMMM !!!

MySQL ignores trailing spaces when comparing strings (with PAD SPACE collations such as `utf8_unicode_ci`), but leading spaces still count.

So be careful to trim values before storing them in MySQL to keep everything consistent.
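Here is a rough Python model of the two rules above, useful for spotting values that MySQL will consider equal. It is an approximation: real `_ci` collations also apply Unicode collation weights (for example, treating some accented letters as equal), which this sketch ignores. Both function names are hypothetical.

```python
def ci_pad_space_equal(a: str, b: str) -> bool:
    """Rough model of `a = b` under a case-insensitive PAD SPACE collation:
    trailing spaces and letter case are ignored, leading spaces are not."""
    return a.rstrip(" ").casefold() == b.rstrip(" ").casefold()


def clean_before_storing(value: str) -> str:
    """Trim both ends so what you store is what comparisons will see."""
    return value.strip()
```

Applied to the four names in the `test` table, `ci_pad_space_equal(name, "abc")` is true for ids 1, 3 and 4, matching the query result above.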

Storing Emoji in MySQL

Emoji take 4 bytes in UTF-8, while MySQL's `utf8` charset stores at most 3 bytes per character. So we must use the `utf8mb4` charset (UTF-8, max 4 bytes per character).

It's safe to migrate a charset from `utf8` to `utf8mb4` 😎, but not the reverse 😅

#TIL : Stats your top-10 most frequent commands

Run this command to show your top-10 most frequently used commands (see explainshell for a breakdown):

    $ history | awk '{print $2}' | sort | uniq -c | sort -nr | head

Example result:

       2064 git
       1284 ls
        826 cd
        700 ssh
        602 clear
        491 python
        473 exit
        341 vagrant
        242 export
        167 ping

#TIL : Bash shell shortcuts

- Ctrl + e : jump cursor to EOL

- Ctrl + a : jump cursor to BOL (beginning of line)

- Ctrl + u : delete all from cursor to BOL

- Ctrl + k : delete all from cursor to EOL

- Ctrl + r : search history, press again for the next match

- Ctrl + l : clear the shell screen

- Ctrl + c : terminate the command (sometimes you have to press it twice)

- Ctrl + z : suspend the command and go back to the shell. Run `fg` to resume the command

#TIL : F-cking stupid limit of input vars

Today, I spent many hours debugging why my POST request was missing some data (specifically the `_token` hidden field). :disappointed:

I tried tuning `post_max_size` (PHP-FPM) and `client_max_body_size` (NGINX), but the data was still missing. After 2-3 hours of searching on Google, I found the answer on PHP.net. A config value limits the maximum number of input variables (`max_input_vars`, default = 1000), and it caused the missing data.

So I set `max_input_vars = 9999` in my `php.ini` and everything works like a charm. :smiley: At least I was lucky: the app rejects a POST request whose CSRF token is missing :grin: so my data is safe!!!
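To check whether a form is close to that limit, you can count the variables in the POST body. Here is a minimal Python sketch with hypothetical names. It counts flat `name=value` pairs, while PHP applies `max_input_vars` per nesting level of array inputs, so treat the result as an estimate.

```python
from urllib.parse import parse_qsl

PHP_DEFAULT_MAX_INPUT_VARS = 1000  # PHP's default max_input_vars


def count_input_vars(body: str) -> int:
    """Count name=value pairs in an application/x-www-form-urlencoded body."""
    return len(parse_qsl(body, keep_blank_values=True))


def exceeds_limit(body: str, limit: int = PHP_DEFAULT_MAX_INPUT_VARS) -> bool:
    """True if the flat field count is above the limit."""
    return count_input_vars(body) > limit
```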

#TIL : Commands

Command `lsof`: list all open files, sockets and pipes

E.g.:

- List processes using port 80 (needs root if the port is between 1 and 1023)

      $ sudo lsof -i:80

- List processes using /bin/bash

      $ lsof /bin/bash